March 19, 2024

Winter Goes into Overtime for Midwest and Great Lakes

The calendar says spring, but wintry weather continues with a busy weather pattern ahead

A.I. skill scores and an update on NVIDIA’s Earth-2 project

Tuesday afternoon warms nicely after a chilly morning across the Eastern U.S.

Next 48 Hours: Continued snowfall across the Great Lakes and Northeast

Day 7 (next Monday): East Coast hybrid or subtropical low-pressure system

Powerful central U.S. storm with potential for significant snowfall; any precipitation is welcome across the central and northern Plains

Colder air across the Western U.S. by Days 8-10

Happy Spring 2024

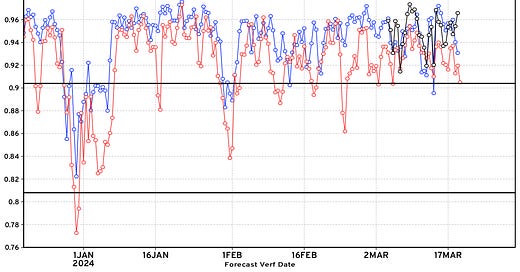

A.I. Skill Scores from ECMWF AIFS and GraphCast-ECMWF

ECMWF’s AIFS (Artificial Intelligence Forecasting System) is its internally developed and trained A.I. global model. Data have been available to the community for the past 3 weeks, and the skill scores (black line) look good compared with ECMWF (IFS) and GFS.

The ECMWF IFS and AIFS succeed and fail at different times, which means there is room for improvement in both. For example, picking the better of the two models in each case would raise the skill score by about 0.01, the equivalent of a major upgrade cycle or perhaps 3 years’ worth of skill improvement.
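To make the “best model of each” idea concrete, here is a minimal Python sketch that scores an oracle which, for every forecast case, keeps whichever of two models verified better. The per-case skill values are made up purely for illustration (they are not the scores in the chart above), and `oracle_gain` is a hypothetical helper name.

```python
import numpy as np

def oracle_gain(skill_a: np.ndarray, skill_b: np.ndarray) -> float:
    """Mean skill gained by picking the better of two models case by case.

    skill_a, skill_b: per-case skill scores (higher is better) for the same
    forecast cases. Returns the oracle mean minus the better single-model mean.
    """
    best_single = max(skill_a.mean(), skill_b.mean())
    oracle = np.maximum(skill_a, skill_b).mean()  # better model for each case
    return float(oracle - best_single)

# Made-up per-case scores for two models that stumble on different days.
ifs = np.array([0.95, 0.93, 0.96, 0.90, 0.94])
aifs = np.array([0.94, 0.95, 0.95, 0.93, 0.93])
gain = oracle_gain(ifs, aifs)
print(f"oracle gain over the better single model: {gain:.3f}")
```

Because the better model is only known after verification, this gain is an upper bound, not something a forecaster can bank on in real time.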

GraphCast initialized from the IFS analysis outperforms both the underlying IFS and AIFS by about 0.005. This is a very small difference, e.g., 95.2% vs. 94.8%.

The gap with GFS is more significant: 95.2% vs. 92.5%.

It should always be noted, however, that combining 2 or 3 models in a single-model, multi-model, or super ensemble with bias correction and post-processing will provide the best solution in the medium range. Each model has its strengths and weaknesses in different locations. Since these are global systems, we need to take advantage of both ECMWF IFS and NOAA GFS, as well as any A.I.-trained versions.
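A minimal sketch of the simplest version of that idea, assuming each model’s only error is a constant mean bias learned from past forecast/observation pairs: remove each model’s bias, then average. `bias_corrected_blend` and the numbers are hypothetical; operational post-processing (regression-based or distributional methods, location-dependent weights) is considerably more sophisticated.

```python
import numpy as np

def bias_corrected_blend(train_fcsts, train_obs, new_fcsts, weights=None):
    """Blend several models after removing each model's mean bias.

    train_fcsts: (n_models, n_train) past forecasts
    train_obs:   (n_train,) matching observations
    new_fcsts:   (n_models,) today's forecasts from each model
    weights:     optional per-model weights (default: equal weights)
    """
    train_fcsts = np.asarray(train_fcsts, dtype=float)
    bias = (train_fcsts - np.asarray(train_obs, dtype=float)).mean(axis=1)
    corrected = np.asarray(new_fcsts, dtype=float) - bias  # de-biased forecasts
    if weights is None:
        weights = np.ones(len(corrected))
    weights = np.asarray(weights, dtype=float)
    return float(np.dot(weights, corrected) / weights.sum())

# Hypothetical temperatures: model A runs 1 degree warm, model B 2 degrees cold.
past = [[11, 13, 15], [8, 10, 12]]
obs = [10, 12, 14]
blend = bias_corrected_blend(past, obs, new_fcsts=[21, 18])
```

Here both de-biased forecasts agree at 20, so the blend is 20 even though the raw forecasts disagreed by 3 degrees.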

NVIDIA Earth-2 Launch


The Earth-2 website has many fancy demo videos and descriptions of what the new system can do. But what’s actually going on?

Global ensemble forecasts are generated with FourCastNet (or another technique such as GraphCast), potentially producing thousands of solutions in seconds or minutes, depending on the GPU power thrown at the problem.
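FourCastNet itself is a large trained network, so this minimal sketch swaps in the classic Lorenz-96 toy model as a stand-in. The point is only the pattern: perturb the analysis, then advance every member in one vectorized call, which is what makes thousands of members cheap on a GPU. Random Gaussian perturbations are an assumption here; the perturbation method used for real ML ensembles may differ.

```python
import numpy as np

def lorenz96_tendency(x, forcing=8.0):
    # dx_i/dt = (x_{i+1} - x_{i-2}) * x_{i-1} - x_i + F on a periodic ring (last axis)
    return (np.roll(x, -1, axis=-1) - np.roll(x, 2, axis=-1)) * np.roll(x, 1, axis=-1) - x + forcing

def step_rk4(x, dt=0.05):
    # One 4th-order Runge-Kutta step; works on a single state or a whole batch.
    k1 = lorenz96_tendency(x)
    k2 = lorenz96_tendency(x + 0.5 * dt * k1)
    k3 = lorenz96_tendency(x + 0.5 * dt * k2)
    k4 = lorenz96_tendency(x + dt * k3)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

def run_ensemble(analysis, n_members=1000, n_steps=40, perturb=0.01, seed=0):
    rng = np.random.default_rng(seed)
    # Every member starts from the analysis plus small random noise.
    members = analysis + perturb * rng.standard_normal((n_members, analysis.size))
    for _ in range(n_steps):
        members = step_rk4(members)  # one vectorized call advances all members
    return members

# Spin up a realistic "analysis" state, then launch 1,000 members from it.
analysis = np.full(40, 8.0)
analysis[0] += 0.01
for _ in range(500):
    analysis = step_rk4(analysis)
ensemble = run_ensemble(analysis)
spread = ensemble.std(axis=0).mean()  # average member spread around the ring
```

The tiny initial perturbations grow quickly in this chaotic toy model, which is exactly why a large ensemble is useful for sampling the range of possible outcomes.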

WRF (or another mesoscale model) is run to downscale ERA5 or operational analyses over the past several years, creating a database of higher-resolution simulations at ~2 kilometers over a domain that serves as training data. NVIDIA has experience accelerating WRF and IBM’s GRAF model on GPUs, so generating a few thousand WRF cycles over a region would be computationally efficient.
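Conceptually, that database becomes a supervised training set: each coarse driving field is paired with the ~2 km WRF output valid at the same time. A minimal sketch, assuming both datasets are already loaded as dictionaries keyed by valid time; the function name, grid sizes, and random arrays are hypothetical placeholders, not the actual Earth-2 data pipeline.

```python
from datetime import datetime, timedelta
import numpy as np

def build_training_pairs(coarse_by_time, fine_by_time):
    """Return (times, X, Y): coarse inputs and ~2 km targets valid at the same times."""
    times = sorted(set(coarse_by_time) & set(fine_by_time))  # only matching valid times
    x = np.stack([coarse_by_time[t] for t in times])  # (n, ny_coarse, nx_coarse)
    y = np.stack([fine_by_time[t] for t in times])    # (n, ny_fine, nx_fine)
    return times, x, y

# Hypothetical example: 6-hourly coarse analyses vs. hourly WRF output over 2 days.
start = datetime(2020, 1, 1)
rng = np.random.default_rng(0)
coarse = {start + timedelta(hours=h): rng.standard_normal((8, 8)) for h in range(0, 48, 6)}
fine = {start + timedelta(hours=h): rng.standard_normal((96, 96)) for h in range(48)}
times, X, Y = build_training_pairs(coarse, fine)
```

Only times present in both datasets survive, so the 6-hourly analyses limit this example to 8 training pairs.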

CorrDiff is the magic sauce that links the lower-resolution global ensembles with the high-resolution mesoscale WRF data.

According to NVIDIA, the Earth-2 platform, part of the NVIDIA CUDA-X microservices software, leverages advanced AI models and the CorrDiff generative AI model to produce high-resolution simulations 1,000 times faster and 3,000 times more energy-efficiently than current numerical models.

What is CorrDiff?

We employ a two-step approach, Corrector Diffusion (CorrDiff), where a UNet prediction of the mean is corrected by a diffusion step.
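The two-step structure can be sketched in a few lines: a deterministic prediction of the mean, plus a stochastic residual drawn by a diffusion sampler. This is a toy under loud assumptions, not CorrDiff itself. The “UNet” is replaced by nearest-neighbor upsampling, and the trained denoiser is replaced by the closed-form denoiser for i.i.d. Gaussian residuals, which makes the Euler sampler on the probability-flow ODE exact in principle. The real residual model is also conditioned on the coarse input, which this sketch ignores. All function names are hypothetical.

```python
import numpy as np

def regression_mean(coarse, factor):
    # Hypothetical stand-in for CorrDiff's UNet: nearest-neighbour upsampling
    # of the coarse field onto the fine grid (a trained UNet would go here).
    return np.kron(coarse, np.ones((factor, factor)))

def sample_residual(shape, rng, sigma_data=1.0, n_steps=200,
                    sigma_max=80.0, sigma_min=0.002):
    # Toy diffusion sampler: Euler steps on the probability-flow ODE
    # dx/dsigma = (x - D(x; sigma)) / sigma, from sigma_max down to sigma_min.
    # ASSUMPTION: residuals are i.i.d. N(0, sigma_data^2), so the ideal
    # denoiser D has a closed form instead of being a trained network.
    sigmas = np.geomspace(sigma_max, sigma_min, n_steps)
    x = sigma_max * rng.standard_normal(shape)  # start from (almost) pure noise
    for s, s_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = x * sigma_data**2 / (sigma_data**2 + s**2)
        x = x + (s_next - s) * (x - denoised) / s
    return x

def corrdiff_like_sample(coarse, factor, rng):
    # Step 1: deterministic mean. Step 2: stochastic residual added on top.
    mean = regression_mean(coarse, factor)
    return mean + sample_residual(mean.shape, rng)

rng = np.random.default_rng(42)
coarse = np.array([[1.0, 2.0], [3.0, 4.0]])
samples = np.stack([corrdiff_like_sample(coarse, 16, rng) for _ in range(50)])
```

Each call returns a different high-resolution realization around the same mean, which is how the two-step design supplies both a sharp estimate and a measure of uncertainty.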

In this context, ML downscaling enters as an advanced (nonlinear) form of statistical downscaling with the potential to emulate the fidelity of dynamical downscaling.

A physics-inspired, two-step approach (CorrDiff) to learn mappings between low- and high-resolution weather data with high fidelity.

CorrDiff provides realistic measures of stochasticity and uncertainty, in terms of the Continuous Ranked Probability Score (CRPS) and by comparing spectra and distributions.
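For readers unfamiliar with CRPS, here is a minimal sketch of the standard ensemble estimator for a single observation, CRPS = E|X − y| − ½E|X − X′|, with the expectations replaced by averages over members (lower is better, and it reduces to absolute error for a one-member forecast). This is the plain estimator. A “fair” variant divides the second term by m(m − 1) instead of m², and we don’t know which form the CorrDiff evaluation used.

```python
import numpy as np

def crps_ensemble(members, obs):
    """CRPS of an ensemble forecast for one scalar observation (lower is better).

    Kernel form: mean |x_i - y| minus half the mean |x_i - x_j| over all pairs.
    """
    x = np.asarray(members, dtype=float)
    accuracy = np.abs(x - obs).mean()                   # E|X - y|
    spread = np.abs(x[:, None] - x[None, :]).mean()     # E|X - X'|
    return float(accuracy - 0.5 * spread)

single = crps_ensemble([0.0], 1.0)        # one member: equals absolute error
spread_out = crps_ensemble([0.0, 2.0], 1.0)
```

The second term rewards an ensemble whose spread honestly brackets the observation, which is why CRPS is a natural check on whether generated uncertainty is realistic.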

CorrDiff reproduces the physics of coherent weather phenomena remarkably well, correcting frontal systems and typhoons.

CorrDiff is sample-efficient, learning effectively from just 4 years of data.

CorrDiff on a single GPU is at least 22 times faster and 1,300 times more energy efficient than the numerical model used to produce its high-resolution training data, which is run on 928 CPU cores.
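Since energy is average power multiplied by run time, the two quoted ratios together imply how much more power the CPU run draws. A quick back-of-the-envelope calculation, assuming both figures come from the same benchmark runs (the quote says “at least” 22 times, so the implied ratio is approximate):

```python
# Figures quoted above: CorrDiff on 1 GPU vs. the numerical model on 928 CPU cores.
speedup = 22          # t_numerical / t_corrdiff
energy_ratio = 1300   # E_numerical / E_corrdiff

# E = P * t, so E_num / E_cd = (P_num / P_cd) * (t_num / t_cd).
implied_power_ratio = energy_ratio / speedup
print(f"implied average power ratio: {implied_power_ratio:.0f}x")
```

In other words, most of the energy advantage comes from replacing a large CPU allocation with a single GPU, not from the speedup alone.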

NVIDIA has practically unlimited GPU power, plus the techniques and software to throw at this problem. But what will the ultimate result be? My thoughts, based on the last year of global ML/AI weather modeling: this is a very complicated and still computationally expensive system for downscaling large-scale global models and replicating high-resolution mesoscale model ensembles like WRF over rather small domains. While the system can “learn physics” and replicate incredibly detailed weather systems such as typhoons, it’s not yet clear to me how it will outperform the underlying NWP model initialized with the best possible initial conditions. Of course, you still need the NWP system to generate the analysis, which is based on advanced (ensemble) data assimilation and a 6- to 12-hour model integration.

The advantages seem to be running 2-kilometer downscaled ensembles out to 15 days at minimal computational expense, generating an enormous repository of data that may represent the probability distribution of solutions. The same approach could be run in climate mode to downscale hundreds or thousands of simulated years, providing hyperlocal simulations of the coming decades under climate change.

The inferences, or A.I.-trained forecasts, are excellent contributions to medium-range forecasting, and DeepMind has shown progress with ensembles, as has ECMWF with AIFS in ensemble mode. However, capturing extremes and the nonlinearities of the coupled atmosphere-ocean system remains elusive for current coarse-resolution systems. With training data from a global 2-km model (DestinE), generated up front with CPUs and accelerated with GPUs, we may be getting closer to convective-scale global forecasting.
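Turning such a repository into probabilities can be as simple as counting members. A minimal sketch, assuming the downscaled members are stacked into one NumPy array of shape (members, y, x); the gamma-distributed “precipitation” below is random placeholder data, and `exceedance_probability` is a hypothetical helper.

```python
import numpy as np

def exceedance_probability(members, threshold):
    """Fraction of ensemble members exceeding a threshold at each grid point.

    members: array (n_members, ny, nx), e.g. downscaled 24-h precipitation.
    Returns an (ny, nx) array of probabilities in [0, 1].
    """
    return (np.asarray(members) > threshold).mean(axis=0)

rng = np.random.default_rng(1)
members = rng.gamma(shape=0.8, scale=10.0, size=(1000, 50, 50))  # placeholder mm values
p_heavy = exceedance_probability(members, threshold=25.0)
p90 = np.percentile(members, 90, axis=0)  # 90th-percentile amount per grid point
```

With thousands of members, these per-gridpoint probabilities and percentiles become smooth enough to resolve fairly rare events, which is where a cheap, very large ensemble pays off.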


Subscribe to Weather Trader to keep reading this post and get 7 days of free access to the full post archives.